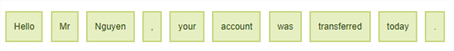

Tokenization

One common task in NLP (Natural Language Processing) is tokenization: the process of breaking a text, or a set of texts, into individual units called tokens. Depending on the tokenizer, tokens can be words, numbers, alphanumeric strings, and sometimes punctuation marks. In the simple word-level form described here, punctuation and other special characters such as @#$%^&*()=+[]\ are ignored, so only the words, numbers, and alphanumerics remain. Tokenization makes other, more complex NLP functions possible, because the resulting tokens serve as the input for later analysis or tasks.
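As a minimal sketch of the idea, the snippet below splits a string into word-level tokens using only Python's standard `re` module. The function name `tokenize` and the sample sentence are made up for illustration, and the rule "a token is a run of letters, digits, or underscores" is an assumption; real NLP libraries use more careful rules.

```python
import re
from typing import List


def tokenize(text: str) -> List[str]:
    """Split text into word-level tokens, dropping punctuation.

    Assumption for this sketch: a token is any run of word characters
    (letters, digits, underscore). Everything else, including
    @#$%^&*()=+[]\\ and whitespace, acts as a separator and is discarded.
    """
    return re.findall(r"\w+", text)


# Hypothetical sample input, chosen to include punctuation and an alphanumeric.
tokens = tokenize("Email me @ 9am: NLP is fun (really)!")
print(tokens)  # ['Email', 'me', '9am', 'NLP', 'is', 'fun', 'really']
```

A rule this simple has limits: a contraction such as "don't" comes out as two tokens, "don" and "t", because the apostrophe is treated as a separator. That is one reason practical tokenizers often handle punctuation explicitly instead of discarding it.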